This week, I spent a few nights building a low-latency DASH server that streams live video in conformance with the newly published LL-DASH specification. The server is built entirely from open source tools, plus some integration code I wrote to glue the different pieces together. In this article, I’m going to show how I built and tested the server.

This March, the DASH Industry Forum (DASH-IF) published a new change request to the DASH IOP guidelines on low-latency modes for DASH live streaming (LL-DASH). This extension to the standard builds on the earlier work on DVB low-latency DASH. It also proposes several important new features, such as Resync elements for fast stream joining and a service description that tells players how to operate in low-latency mode, along with implementation details and recommended settings for video encoders, origin servers, CDNs and players. The full specification is available here. The proposed changes have been implemented in ffmpeg and the dash.js player. Akamai has created a sample stream for public testing, which is playable with dash.js.

Overview

First, I’ll provide an overview of what I have done. On the server side, I used ffmpeg to ingest an RTMP source stream from OBS Studio and to output the LL-DASH manifest and media segments. The output DASH stream is saved to my local disk. To handle player requests and deliver the stream with low latency, I also used a node.js GPAC server called node-gpac-dash. Node-gpac-dash was developed around 2015/2016 for DVB-DASH low-latency streaming, but it has most of the features I need. It includes a web server that handles player requests and directly serves “pullable” content, such as the DASH mpd file and init segments. For video segments, which must be pushed to the player using HTTP Chunked Transfer Encoding (CTE), node-gpac-dash reads each segment chunk by chunk and sends every chunk to the player.
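To make the chunked transfer step more concrete, here is a minimal Python sketch of how HTTP/1.1 chunked transfer framing works on the wire. It is only an illustration and not code from node-gpac-dash (a node.js HTTP server normally does this framing for you); the function names are my own.

```python
from typing import Iterable, Iterator

# A zero-length chunk tells the client that the response body is complete.
LAST_CHUNK = b"0\r\n\r\n"


def encode_chunk(payload: bytes) -> bytes:
    """Frame one non-empty payload as an HTTP/1.1 chunk.

    Wire format: payload size in hexadecimal, CRLF, payload bytes, CRLF.
    """
    if not payload:
        raise ValueError("an empty payload would end the body; send LAST_CHUNK instead")
    return b"%x\r\n" % len(payload) + payload + b"\r\n"


def chunked_body(media_chunks: Iterable[bytes]) -> Iterator[bytes]:
    """Yield every media chunk as an HTTP chunk, then the terminating chunk."""
    for media_chunk in media_chunks:
        yield encode_chunk(media_chunk)
    yield LAST_CHUNK


if __name__ == "__main__":
    # Two fake media chunks of 16 and 2 bytes.
    print(b"".join(chunked_body([b"a" * 16, b"bc"])))
```

Because each media chunk can be framed and flushed as soon as it is written, the player can start decoding a segment before the segment is complete.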

Since node-gpac-dash was intended for DVB-DASH low latency, I had to make a few changes to integrate it with the new LL-DASH standard and the ffmpeg-specific implementation. For example, the original node-gpac-dash assumes that each LL-DASH segment ends with an EODS box that signals the end of the segment. This non-standard box is only carried in media segments generated by MP4Box, the GPAC packager. When node-gpac-dash detects an EODS box while reading a video segment, it ends the ongoing chunked transfer by sending a zero-length HTTP chunk to the player. Since EODS is non-standard, ffmpeg does not support it. Instead of relying on the EODS box, I added a new command line option to node-gpac-dash that specifies the number of video chunks in a video segment. Given the chunks per segment, node-gpac-dash can easily tell when the end of a segment is reached. I implemented this change in my modified node-gpac-dash code, and it worked well. However, you will also need a one-line change in FFmpeg to integrate with node-gpac-dash. I will get to this later in the article.
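The chunk-counting idea can be sketched in a few lines of Python. This is not the actual node-gpac-dash code. It assumes that each chunk in the segment file is a “moof” box followed by an “mdat” box, so counting complete “mdat” boxes gives the number of chunks written so far. The function names are hypothetical.

```python
import struct
from typing import Iterator, Tuple


def iter_boxes(data: bytes) -> Iterator[Tuple[str, int, int]]:
    """Yield (type, offset, size) for each complete top-level ISO BMFF box."""
    offset = 0
    while offset + 8 <= len(data):
        size, box_type = struct.unpack_from(">I4s", data, offset)
        header = 8
        if size == 1:
            # A 64-bit "largesize" field follows the box type.
            if offset + 16 > len(data):
                break
            (size,) = struct.unpack_from(">Q", data, offset + 8)
            header = 16
        elif size == 0:
            # The box extends to the end of the data.
            size = len(data) - offset
        if size < header or offset + size > len(data):
            break  # malformed or still being written: stop and retry later
        yield box_type.decode("ascii", "replace"), offset, size
        offset += size


def segment_complete(data: bytes, chunks_per_segment: int) -> bool:
    """True once the data holds at least chunks_per_segment complete mdat boxes."""
    mdat_count = sum(1 for box_type, _, _ in iter_boxes(data) if box_type == "mdat")
    return mdat_count >= chunks_per_segment


def make_box(box_type: bytes, payload: bytes = b"") -> bytes:
    """Build a box with a 32-bit size field (test helper for the demo below)."""
    return struct.pack(">I4s", 8 + len(payload), box_type) + payload


if __name__ == "__main__":
    chunk = make_box(b"moof", b"\x00" * 4) + make_box(b"mdat", b"\x01" * 10)
    segment = make_box(b"styp") + chunk * 4
    print(segment_complete(segment, 4))       # all four chunks are present
    print(segment_complete(segment[:-1], 4))  # last mdat is still incomplete
```

A server following this approach would send the zero-length HTTP chunk as soon as the check succeeds, which replaces the role of the EODS box.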

On the player side, dash.js has also implemented support for the new LL-DASH standard, so I simply used it without further changes to play the low-latency stream. I tested my implementation on my local MacBook and achieved a glass-to-glass latency of about 4 seconds (including video capture and encoding in OBS, LL-DASH content generation and output in ffmpeg, and stream delivery to the player).

In the rest of this article, I’m going to provide all the details, including how to configure OBS to generate a proper live source, the command options for running ffmpeg to ingest the source and output LL-DASH content, the specific changes I made to node-gpac-dash to perform LL-DASH streaming, and the test results and analysis. A prerequisite before running any of the steps is to synchronize your computer with a standard time server, for example using ntpd. The Dash.js player synchronizes its time with http://time.akamai.com/?iso&ms.
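As a quick sanity check of the clock synchronization, the Python sketch below compares the local clock with the time server. It assumes the server answers with a plain ISO 8601 UTC timestamp such as “2020-08-14T15:44:24.611Z”; that response format is my assumption, so adjust the parser if yours differs. The measurement ignores the network round trip, so treat the result as a rough estimate.

```python
from datetime import datetime, timezone
from urllib.request import urlopen

TIME_URL = "http://time.akamai.com/?iso&ms"  # URL quoted in the article


def parse_iso_utc(text: str) -> datetime:
    """Parse an ISO 8601 UTC timestamp, with or without fractional seconds."""
    text = text.strip()
    fmt = "%Y-%m-%dT%H:%M:%S.%fZ" if "." in text else "%Y-%m-%dT%H:%M:%SZ"
    return datetime.strptime(text, fmt).replace(tzinfo=timezone.utc)


def clock_offset_ms(server_time: datetime, local_time: datetime) -> float:
    """Positive when the local clock is ahead of the server clock."""
    return (local_time - server_time).total_seconds() * 1000.0


def measure_offset_ms(url: str = TIME_URL, timeout: float = 5.0) -> float:
    """Fetch the server time once and compare it with the local UTC clock."""
    with urlopen(url, timeout=timeout) as response:
        body = response.read().decode("ascii")
    return clock_offset_ms(parse_iso_utc(body), datetime.now(timezone.utc))


if __name__ == "__main__":
    print(f"local clock offset: {measure_offset_ms():.0f} ms")
```

An offset of more than a few tens of milliseconds suggests the machine is not well synchronized, which would skew any latency numbers measured later.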

Use OBS to generate RTMP live source

I used OBS Studio to capture webcam video and generate the live RTMP source stream. Here is how I configured OBS.

- First, open OBS Studio and click the “Settings” button. The settings window will pop up.

- Click on the “Stream” setting, select “Custom” for “Service”.

- Enter “rtmp://[ffmpeg_listening_ip]:[ffmpeg_listening_port]/live/” into “Server”. This is the ffmpeg ingest endpoint. I chose RTMP for live ingest, but feel free to use other protocols, such as RTP or WebRTC, if you prefer encoders other than OBS. “[ffmpeg_listening_ip]:[ffmpeg_listening_port]” is the IP and port where ffmpeg listens for the incoming stream. [ffmpeg_listening_port] should be 1935, the default for RTMP. Also, please make sure OBS can reach ffmpeg at that IP/port combination.

- Enter “[your_stream_key]” into “Stream key”. My ffmpeg ingests the live source at “rtmp://192.168.50.165:1935/live/app”, so my stream key is “app”.

- Click “Output”. In the output sub-window, check the “Enable Advanced Encoder Settings” box, and some additional encoder settings will show up. I entered “keyint=120” into “Custom Encoder Settings”. This makes OBS generate one key frame for every 120 encoded frames. At a frame rate of 30 fps, that means a GOP (Group Of Pictures) size of 4 seconds. Why do I need to configure this? Because ffmpeg complained about unstable segment durations caused by a variable key frame interval. For low-latency DASH streaming using a segment template, players are very sensitive to variation in segment duration, so I had to specify a key frame interval to enforce a constant segment duration.

- Finally, to measure the end-to-end latency accurately, I had to burn the current timestamp into the video. Please follow this page for how to do this.
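The key frame interval arithmetic from the “keyint” step above can be checked with a small Python sketch. The helper names are my own; the only inputs are the frame rate and the GOP duration you want to match your segment duration.

```python
def keyint_for(fps: float, gop_seconds: float) -> int:
    """Frames between key frames for a GOP lasting gop_seconds at fps.

    Raises ValueError when the GOP would not be a whole number of frames,
    because such a GOP cannot give a constant segment duration.
    """
    frames = fps * gop_seconds
    if frames <= 0 or abs(frames - round(frames)) > 1e-9:
        raise ValueError(f"{gop_seconds} s at {fps} fps is not a whole number of frames")
    return int(round(frames))


def gop_seconds_for(keyint: int, fps: float) -> float:
    """GOP duration in seconds for a given key frame interval and frame rate."""
    return keyint / fps


if __name__ == "__main__":
    print(keyint_for(30, 4))         # 120, the value entered in OBS
    print(gop_seconds_for(120, 30))  # 4.0 seconds per GOP
```

If you change the frame rate or the segment duration, recompute “keyint” so that one segment still contains a whole number of GOPs.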

Alternatively, you can use OBS to stream a local video file in a loop as the live input source. I followed these instructions to set this up: https://streamshark.io/obs-guide/looping-video. It worked well for me. After you are done with all the above configuration, please do not click the “Start Streaming” button right away. You have to run ffmpeg first so that it is listening for the incoming RTMP stream.

Use ffmpeg to ingest live source and generate LL-DASH stream

First, you will need a one-line change to FFmpeg. In libavformat/dashenc.c, in the function dash_write_packet, find the following line:

snprintf(os->temp_path, sizeof(os->temp_path), use_rename ? "%s.tmp" : "%s", os->full_path);

The above line tells FFmpeg to write partial DASH segments to a temporary file with a “.tmp” extension, e.g., “chunk-stream0-00001.m4s.tmp”. When the segment is fully generated, the temporary file is renamed to drop the “.tmp” extension, e.g., “chunk-stream0-00001.m4s”. The renaming can lead to a race condition when the modified node-gpac-dash tries to read the last chunk of a segment at about the same time, and this could crash node-gpac-dash. To avoid the crash, I chose to remove the “.tmp” extension, so that FFmpeg always writes to the same file whether the segment is partially or fully generated, e.g., “chunk-stream0-00001.m4s”. The new line looks like this:

snprintf(os->temp_path, sizeof(os->temp_path), use_rename ? "%s" : "%s", os->full_path);

You will need to rebuild FFmpeg after making this change.

FFmpeg provides an “-ldash” option. However, using “-ldash” alone will not generate an LL-DASH stream. It has to be combined with a number of other options. Below is the full FFmpeg command I used:

ffmpeg -f flv -listen 1 -i rtmp://192.168.50.165:1935/live/app -c:v h264 -force_key_frames "expr:gte(t,n_forced*4)" -profile:v baseline -an -map v:0 -s:0 320x180 -map v:0 -s:1 384x216 -ldash 1 -streaming 1 -use_template 1 -use_timeline 0 -adaptation_sets "id=0,streams=v id=1,streams=a" -seg_duration 4 -frag_duration 1 -frag_type duration -utc_timing_url "https://time.akamai.com/?iso" -window_size 15 -extra_window_size 15 -remove_at_exit 1 -f dash /usr/local/var/www/ldash/1.mpd

On the ingest side, the input source is an RTMP stream, so I used “-f flv”, “-listen 1” and “-i rtmp://192.168.50.165:1935/live/app”. “-f flv” tells ffmpeg to expect an RTMP (flv) stream. “-listen 1” tells ffmpeg to operate as an RTMP server. “-i rtmp://192.168.50.165:1935/live/app” specifies the RTMP ingest endpoint.

We can transcode the input source into multiple resolutions and bitrates. This is done by adding a set of “-map” options, each followed by the video resolution and bitrate.

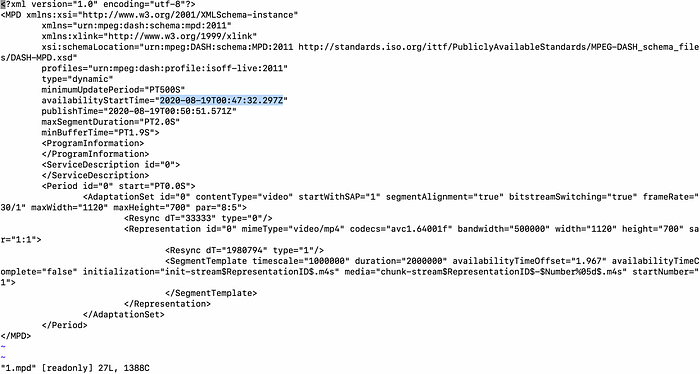

Next, the series of options from “-ldash” to the end are for low-latency DASH output. “-ldash 1” enables DASH low-latency mode. “-streaming 1” must be specified to use chunked streaming mode for the output. “-use_template 1” and “-use_timeline 0” must be specified to publish segments using a segment template. Otherwise, a segment timeline will be used, which is not supported by LL-DASH. “-seg_duration 4” makes ffmpeg use 4-second segments. Since I configured the GOP size to 4 seconds in OBS, ffmpeg will package one GOP per segment.

By default, ffmpeg sets the LL-DASH chunk duration to one frame per chunk. You may want to choose your own chunk duration. This can be done using, for example, “-frag_duration 1 -frag_type duration”, which sets the chunk duration to 1 second. You can calculate the number of chunks per segment by dividing the segment duration by the chunk duration. For example, given a 4-second segment duration and a 1-second chunk duration, each segment has 4 chunks. You will need to specify the chunks per segment when you start node-gpac-dash.

“-remove_at_exit 1” tells ffmpeg to remove all the DASH output after the process exits, so I don’t need to remove the files manually every time I run ffmpeg. I also added “-window_size 15 -extra_window_size 15” to specify the DASH time-shift buffer depth (the live sliding window) and to automatically delete any segments that fall out of the window. This avoids exhausting your disk space when the server runs on your local machine for a long time.

To make the stream standard-compliant, you should also specify a timing server as the time source used to timestamp your LL-DASH media segments and manifest. This can be done using the “-utc_timing_url” option, e.g., “-utc_timing_url https://time.akamai.com/?iso”. On the player side, it is recommended to use the same time source, so that the client is accurately synchronized with the server. For example, Dash.js also uses “https://time.akamai.com/?iso” as the time source.

Finally, “-f dash /usr/local/var/www/ldash/1.mpd” specifies the output format and path. For this demo, I chose to write the stream to a local path. FFmpeg writes each segment to disk in a chunked (progressive) manner. You can also upload to a remote path, such as AWS S3, by using “-f dash -method PUT -chunked_post 1 -http_persistent 1 http://your_bucket.amazonaws.com/”. The HTTP method for uploading can be “PUT” or “POST”. “-chunked_post” enables chunked uploading. AWS S3 supports chunked POST as far as I know, but I have never tried it. “-http_persistent 1” is specified to use an HTTP persistent connection for uploading. You can find more configuration options in the ffmpeg format documentation. The LL-DASH specification also provides recommended ffmpeg options and parameters for LL-DASH streaming. The following figure shows the content of the DASH mpd file,

After you run the full ffmpeg command, go back to OBS and click “Start Streaming”. Ffmpeg will soon start to ingest, repackage and output LL-DASH stream data. Since I chose to write the stream data to my NGINX web root directory, the stream is now ready to play in the dash.js player with regular latency. However, it is not yet ready to be streamed with low latency. I still need to run the modified node-gpac-dash to enable low-latency mode with chunked transfer. In the next section, I will show how to do that.

Use the modified node-gpac-dash to perform chunked transfer

My modified node-gpac-dash code can be found here. First, check out the code and copy gpac-dash.js to the parent of the folder where ffmpeg writes the LL-DASH media segments. For example, my ffmpeg writes DASH data to “/usr/local/var/www/ldash”, so I copied gpac-dash.js to “/usr/local/var/www/” and ran it from there. Next, run “node gpac-dash.js -chunk-media-segments -cors -chunks-per-segment 4” to start the LL-DASH server. “-chunk-media-segments” must be specified to perform chunked transfer, and “-cors” allows cross-origin requests. “-chunks-per-segment” specifies the number of chunks in a segment, which must match the chunks per segment you used when running FFmpeg. Now I have OBS, ffmpeg and gpac-dash.js all running on my MacBook, which is all I need to run an LL-DASH server. Next, it is time to play the stream with the dash.js player.
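Since the “-chunks-per-segment” value has to agree with the ffmpeg segment and chunk durations, one way to avoid a mismatch is to derive both command lines from the same two numbers. The Python sketch below does that using only the options quoted in this article. The helper names are hypothetical.

```python
from fractions import Fraction
from typing import List


def chunks_per_segment(seg_duration, frag_duration) -> int:
    """Segment duration divided by chunk duration, required to be a whole number."""
    seg = Fraction(str(seg_duration))
    frag = Fraction(str(frag_duration))
    if seg <= 0 or frag <= 0:
        raise ValueError("durations must be positive")
    ratio = seg / frag
    if ratio.denominator != 1:
        raise ValueError("segment duration is not a multiple of the chunk duration")
    return ratio.numerator


def ffmpeg_duration_args(seg_duration, frag_duration) -> List[str]:
    """The ffmpeg options that control segment and chunk duration."""
    return ["-seg_duration", str(seg_duration),
            "-frag_duration", str(frag_duration), "-frag_type", "duration"]


def gpac_dash_command(seg_duration, frag_duration) -> List[str]:
    """The node-gpac-dash command line with a matching chunk count."""
    count = chunks_per_segment(seg_duration, frag_duration)
    return ["node", "gpac-dash.js", "-chunk-media-segments", "-cors",
            "-chunks-per-segment", str(count)]


if __name__ == "__main__":
    print(" ".join(ffmpeg_duration_args(4, 1)))
    print(" ".join(gpac_dash_command(4, 1)))
```

With 4-second segments and 1-second chunks this reproduces the “-chunks-per-segment 4” used above, and it fails early if the two durations do not divide evenly.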

Use dash.js to play the LL-DASH stream

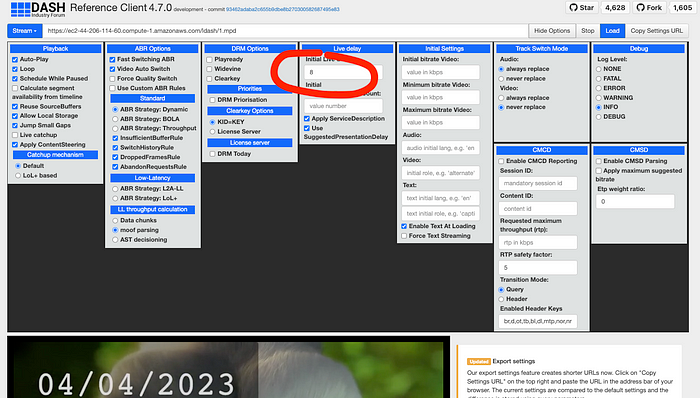

Dash.js fully supports the latest LL-DASH standard, so I didn’t make any changes to it. I just opened the latest dash.js player (v3.1.2 as of today) in my browser and entered the stream URL, “http://192.168.50.165:8000/ldash/1.mpd”. Remember to click “Show Options” and check the “low latency mode” box. Finally, click “Load” to start playing. When the video shows up, compare the timestamp in the video to your current local time from time.is. I found a difference of 4 seconds, which was my glass-to-glass delay (Figure 2). At the end of each segment download, node-gpac-dash printed a line like the following: “end of file reading (ldash/chunk-stream0-00028.m4s) in 3969 ms at UTC 1597419864611”. This means node-gpac-dash served the segment chunk by chunk over a total of 3969 ms, which matches the duration of the segment transferred. With regular-latency DASH, the player downloads segments as fast as the bandwidth allows, so the download time would be much shorter.
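To check this pacing over many segments instead of reading the log by eye, the log lines can be parsed with a short Python sketch. The pattern is based only on the single log line quoted above, so it is an assumption that other lines follow the same format. The names are my own.

```python
import re
from typing import NamedTuple, Optional

LOG_PATTERN = re.compile(
    r"end of file reading \((?P<path>[^)]+)\) in (?P<ms>\d+) ms at UTC (?P<utc>\d+)"
)


class SegmentLog(NamedTuple):
    path: str
    transfer_ms: int
    finished_utc_ms: int


def parse_segment_log(line: str) -> Optional[SegmentLog]:
    """Extract the segment path, transfer time and end time from one log line."""
    match = LOG_PATTERN.search(line)
    if match is None:
        return None
    return SegmentLog(match["path"], int(match["ms"]), int(match["utc"]))


def pacing_ratio(entry: SegmentLog, seg_duration_s: float) -> float:
    """Transfer time divided by segment duration.

    A value close to 1.0 means the segment was delivered at live pace,
    while a value far below 1.0 looks like a regular full-speed download.
    """
    return entry.transfer_ms / (seg_duration_s * 1000.0)


if __name__ == "__main__":
    line = ("end of file reading (ldash/chunk-stream0-00028.m4s) "
            "in 3969 ms at UTC 1597419864611")
    entry = parse_segment_log(line)
    print(entry, pacing_ratio(entry, 4))
```

For the line quoted above the ratio is just under 1.0, consistent with a 4-second segment being pushed out as it is produced.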

Considering that I ran everything on my local machine, a 4-second delay sounds a bit long for low-latency streaming, but it is still much lower than regular DASH live. Earlier this month, I experimented with the community low-latency HLS technology on AWS Interactive Video Service (IVS) using a slightly different setup (OBS capturing on my local machine, RTMP ingest into AWS, and playback in an AWS IVS sample player on my local machine). The delay was 5 seconds, but in that case the server ran in AWS.

By my measurement, it took 2.5~3 seconds between when OBS started capturing and when ffmpeg received the first video frame. This is the ingest delay. By using a faster encoder and a real-time ingest protocol such as RTP, I see a possibility of reducing the ingest delay from 2.5~3 seconds to around 0.5 seconds, which could lead to a glass-to-glass delay of around 1.5~2 seconds. One more optimization would be to keep the media segments in memory instead of writing them to disk, which may save another several hundred milliseconds.
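The projected numbers follow from a simple latency budget: keep everything except the ingest delay fixed and swap in the faster ingest. A minimal Python sketch of that arithmetic, using the figures from this article:

```python
def projected_latency(current_total_s: float,
                      current_ingest_s: float,
                      target_ingest_s: float) -> float:
    """Glass-to-glass latency if only the ingest delay changes."""
    if current_ingest_s > current_total_s:
        raise ValueError("ingest delay cannot exceed the total latency")
    return current_total_s - current_ingest_s + target_ingest_s


if __name__ == "__main__":
    # Measured: about 4 s in total, of which 2.5 to 3 s is ingest delay.
    for ingest in (2.5, 3.0):
        print(ingest, "->", projected_latency(4.0, ingest, 0.5))
```

The two cases give 2.0 and 1.5 seconds, which is where the estimate of around 1.5~2 seconds comes from.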

OK, that is all about my work on this topic. If you have any questions, please reach out to me directly at maxutility2011@gmail.com.

Update — April 2023

If you experience unstable streaming using Dash.js, it is probably caused by high network jitter between your client and server. You might want to set an “initial delay” in Dash.js as follows,

Or, you can set “-target_latency 8” in the FFmpeg command, e.g.,

ffmpeg -f flv -listen 1 -i rtmp://0.0.0.0:1935/live/app -c:v h264 -force_key_frames "expr:gte(t,n_forced*4)" -r 30 -profile:v baseline -an -map v:0 -s:0 768x432 -b:v:0 949k -maxrate 1557k -bufsize 1557k -map v:0 -s:1 1024x576 -b:v:1 1854k -maxrate 2982K -bufsize 2982K -ldash 1 -target_latency 8 -streaming 1 -use_template 1 -use_timeline 0 -adaptation_sets "id=0,streams=v" -seg_duration 4 -frag_duration 1 -frag_type duration -utc_timing_url "https://time.akamai.com/?iso" -window_size 15 -extra_window_size 15 -remove_at_exit 1 -f dash /home/ubuntu/contents/ldash/1.mpd